This app may have performance issues in Firefox. Try a Chromium-based browser.

Full view of app and control panel here

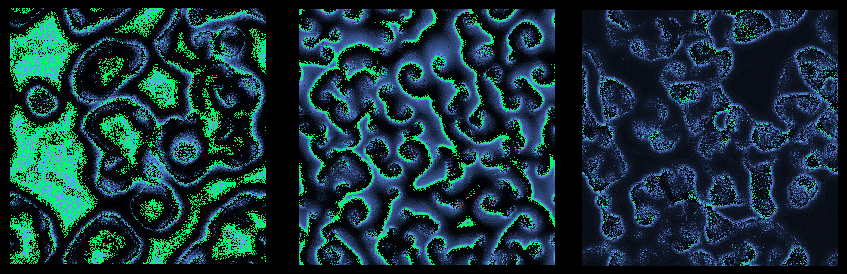

If this is what brain activity looks like, then how do algorithms fit into the picture?

In my research, I occasionally come back to this simulation because it is so provocative and mesmerizing. Nothing else I have made looks as clearly organic and alive. Yet, whereas other simulations focus on performance of tasks, this simulation does nothing useful on its own. It simply demonstrates a principle: that neurons, even in extremely simplified models, can produce a vast diversity of dynamical regimes, separated by small changes to a few parameters.

In the above simulation, you can watch and interact with the autonomous, self-organizing behavior of up to 1 million simple model neurons, roughly the scale of a few millimeters of cortical tissue. Each simulated neuron is simple and identical, signaling strongly to its immediate neighbors and weakly to all others. This global-local connectivity pattern produces a chaotic tension between small-scale organization and total synchronization. The global connections drive spontaneous activity in quiescent regions, while the local connections expand and organize that activity into waves, spirals, and other transient motifs. When local connections completely dominate, they trend toward a kind of entropy. When that entropy reaches its peak, global synchronization emerges once again, restarting the process.

Adjusting the parameters of the model (see the Sim Controls tab) modulates these behaviors in dramatic ways. Small increments to voltage decay, excitation, inhibition, and spike dropout probability can result in regimes totally different from the one I just described.
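To make the global-local idea concrete, here is a minimal NumPy sketch of one update step on a grid of leaky threshold neurons. It is not the code behind the app. The update rule, the function name `step`, the `global_gain` and `threshold` parameters, all default values, and the choice to model inhibition as self-suppression after a spike are assumptions made for illustration. Only the roles of voltage decay, excitation, inhibition, and spike dropout come from the description above.

```python
import numpy as np

def step(v, spikes, rng, decay=0.9, excite=0.3, inhibit=0.1,
         global_gain=0.05, dropout=0.1, threshold=1.0):
    """Advance the grid by one tick and return (new_v, new_spikes).

    Hypothetical update rule, for illustration only.
    """
    # Spike dropout: each spike fails to transmit with probability `dropout`.
    sent = spikes * (rng.random(spikes.shape) >= dropout)
    # Strong local coupling: sum over the 8 nearest neighbours (edges wrap).
    local = sum(np.roll(sent, (dy, dx), axis=(0, 1))
                for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                if (dy, dx) != (0, 0))
    # Weak global coupling: every neuron feels the mean transmitted activity.
    global_drive = global_gain * sent.mean()
    # Leaky integration; a neuron that just fired is pushed below rest.
    v = decay * v + excite * local + global_drive - inhibit * spikes
    new_spikes = (v >= threshold).astype(v.dtype)
    # Reset the voltage of neurons that fired on this tick.
    v = np.where(new_spikes > 0, 0.0, v)
    return v, new_spikes

# Usage: seed a 64x64 grid with sparse random spikes and run 100 ticks.
rng = np.random.default_rng(0)
v = np.zeros((64, 64))
spikes = (rng.random((64, 64)) < 0.05).astype(float)
for _ in range(100):
    v, spikes = step(v, spikes, rng)
```

Even in a toy like this, the balance between `excite`, `decay`, and `dropout` decides whether activity dies out, spreads as fronts, or saturates the grid. That sensitivity is the point, not the specific numbers.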

Real cortical tissue exhibits phenomena very similar to those in this simulation and much, much more. Even the molecular mechanisms represented by the static parameters of this model are modulated by the electrochemical soup in which the neurons live. But how can we square this picture of the cortex with the circuit diagrams of computation? What kind of algorithm can conceivably execute in this near-liquid environment of noise, interference, and chaos?

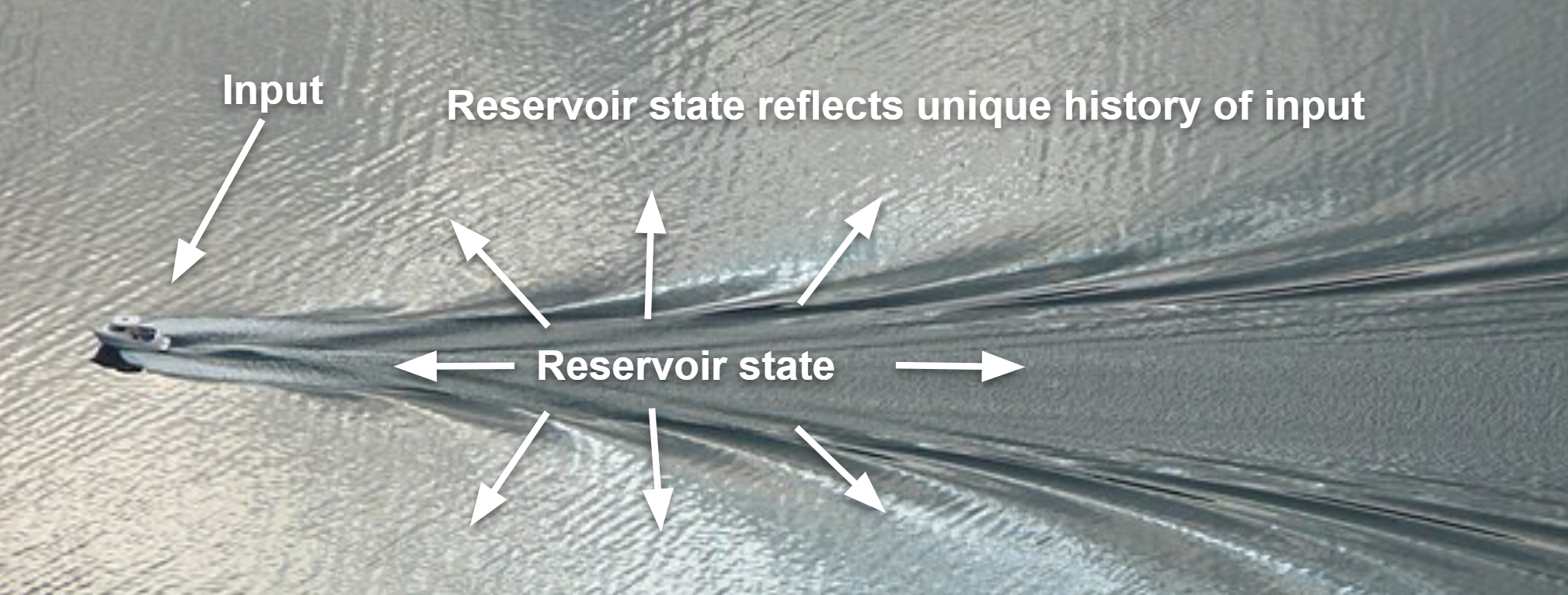

One answer is the principle of reservoir computing. In short, nonlinear dynamical systems with certain properties can be appropriated to perform both general computations and memory. Inputs are mapped to perturbations of some dynamical system, say, a randomly initialized recurrent neural network or a grid of cellular automata. As the system runs, those perturbations reverberate, transform, and combine, literally like ripples on the surface of water. The transformations and reverberations represent both a running history of the inputs and several a priori computations on that history. All that is needed to utilize the system is a trainable output decoder, even a simple feed-forward network, that maps the state of the dynamical system to its outputs. For AI purposes, those outputs tend to be one of predictions, classifications, or action policies.
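The recipe above can be sketched as an echo state network, one common form of reservoir computing. This is a minimal illustration rather than a reference implementation. The reservoir size, the spectral radius rescaling, the ridge penalty, the delayed-recall toy task, and the names `run_reservoir` and `fit_readout` are all assumptions chosen for the example. The recurrent weights are random and never trained; only the linear readout is fit.

```python
import numpy as np

def run_reservoir(inputs, n_units=200, spectral_radius=0.9, seed=0):
    """Drive a fixed random recurrent network and return its state history."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=n_units)
    w = rng.normal(size=(n_units, n_units))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # a common heuristic so perturbations neither vanish at once nor blow up.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    x = np.zeros(n_units)
    states = np.empty((len(inputs), n_units))
    for t, u in enumerate(inputs):
        # Each input perturbs the ongoing dynamics; older inputs keep echoing.
        x = np.tanh(w @ x + w_in * u)
        states[t] = x
    return states

def fit_readout(states, targets, ridge=1e-6):
    """Closed-form ridge regression: the only trained part of the system."""
    a = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(a, states.T @ targets)

# Toy memory task: recover the input from `delay` steps earlier.
rng = np.random.default_rng(1)
u = rng.uniform(-1, 1, size=2000)
delay, washout = 5, 100
states = run_reservoir(u)
X = states[washout:]                 # reservoir state at time t
y = u[washout - delay:-delay]        # input at time t - delay
w_out = fit_readout(X[:1500], y[:1500])
pred = X[1500:] @ w_out
test_mse = np.mean((pred - y[1500:]) ** 2)
baseline_mse = np.var(y[1500:])      # error of always predicting the mean
```

Comparing `test_mse` against `baseline_mse` shows how much of the input history a linear decoder can read back out of the reservoir state, with no gradient ever passing through the recurrent weights.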

Reservoir computing has many appealing qualities as a metaphor for cortical computation. First, it is the kind of computing solution that has a high likelihood in evolutionary logic: full of randomness, mechanistic redundancy, and functional diversity. Second, it simplifies the learning problem to one of feed-forward decoding, even linear decoding, rather than lengthy applications of the chain rule as in error backpropagation. Third, the reservoir can be nearly anything with a complex, persistent state. It does not constrain us to a certain kind of recurrent network, a physical medium, or even a specific domain of numbers. As a theoretical component of cortical computation, reservoir computing relieves the burden of assigning a specific algorithmic role to every neural mechanism or phenomenon, instead treating their collective dynamics as a rich substrate of possible computations.

The above simulation could in principle be incorporated into reinforcement learning agents and allow them, as with any RNN, to solve a wide class of POMDPs. In fact, it is mostly made up of recurrent Conv2D torch modules. There are some benefits to designing RL agents this way, including huge improvements in training efficiency. But it is potentially more valuable as a thought piece. It helps visualize the intractable richness of neural activity, the gap between engineering and evolution, and the possibility of forms of intelligence that we cannot yet imagine.